AI and Machine Learning in OT Cybersecurity: Current Trends

Team Shieldworkz

October 7, 2025

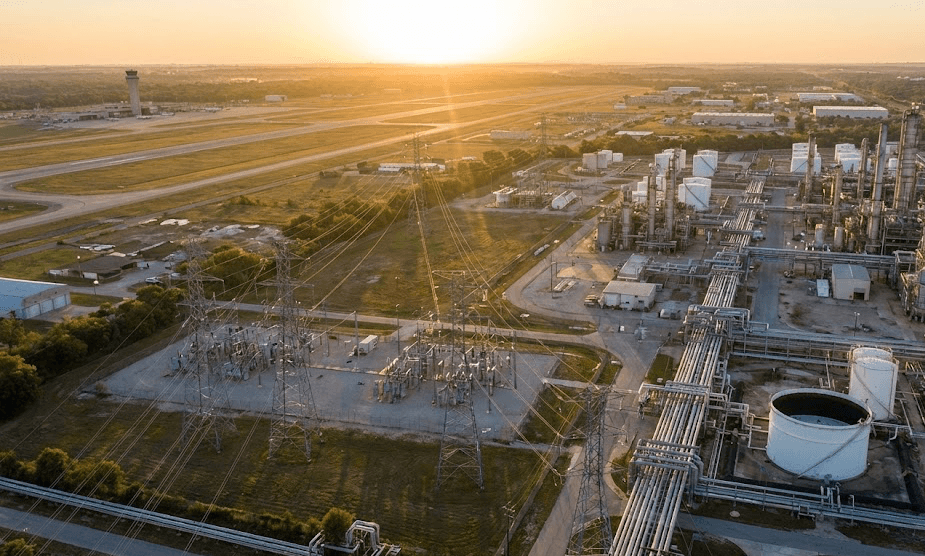

AI and Machine Learning are reshaping how organizations detect threats, predict failures, and respond to incidents - and OT Security is no exception. As industrial sites connect more sensors, remote access tools, and IoT devices, the attack surface grows and adversaries gain new capabilities. You’re probably asking: Which AI/ML tools actually help protect my plant? What new threats should I watch for? And how do we introduce these technologies without disrupting production?

This blog answers those questions in plain language. We’ll walk through the real-world use cases for Machine Learning in OT, the ways attackers are leveraging AI, practical limitations and risks, and a clear playbook you can apply at the site level. Where appropriate, we highlight recent findings from industry threat reports so you can prioritize what to act on first. By the end you’ll have tactical steps for improving detection, response, and resilience - and a straightforward way Shieldworkz can help you implement them safely.

Why AI/ML matter for OT Security today

AI and ML matter in OT because they scale analysis of telemetry and uncover subtle anomalies that rule-based systems miss. Traditional signature-based tools struggle with industrial protocols, noisy sensor data, and the slow change patterns you see in control systems. ML models can learn normal process behavior and flag deviations that imply compromise or equipment degradation.

At the same time, AI gives attackers a force multiplier. We’re seeing automated reconnaissance, faster exploit development, and social-engineering campaigns tuned by large language models. That combination - better defensive tooling and smarter offense - means staying static is not an option. Recent industry reports show a noticeable rise in incidents that target OT systems, underscoring the urgency for modern detection and response approaches.

Top use cases for Machine Learning in OT

Anomaly detection on process telemetry

ML excels at learning what “normal” looks like for flows, temperatures, set points, and interlocks. Unsupervised models (e.g., autoencoders) and time-series models (e.g., LSTM, GRU) can identify subtle drifts, sudden shifts, or sequence violations that are hallmarks of incidents. These systems reduce noisy alerts and surface signals you’d otherwise miss.
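
A minimal way to see "learn a baseline, flag deviations" in action is a rolling z-score on a single sensor tag. This is a deliberately simpler statistical stand-in, not an autoencoder or LSTM. The window size and threshold are assumed values that you would tune per signal.

```python
from collections import deque
from math import sqrt


def rolling_zscore_anomalies(values, window=30, threshold=4.0, min_std=1e-6):
    """Return indices of readings more than `threshold` standard deviations
    away from the mean of the preceding `window` readings judged normal."""
    history = deque(maxlen=window)  # trailing baseline of normal readings
    anomalies = []
    for i, x in enumerate(values):
        if len(history) == window:
            mean = sum(history) / window
            var = sum((h - mean) ** 2 for h in history) / (window - 1)
            std = max(sqrt(var), min_std)  # floor avoids division by zero on flat signals
            if abs(x - mean) / std > threshold:
                anomalies.append(i)
                continue  # keep flagged readings out of the baseline
        history.append(x)
    return anomalies


if __name__ == "__main__":
    # Hypothetical temperature tag: a small repeating pattern, then one spike.
    signal = [50.0 + 0.1 * (i % 5) for i in range(60)] + [58.0] + [50.1] * 5
    print(rolling_zscore_anomalies(signal))
```

Flagged readings are kept out of the baseline, so a sustained fault does not quietly become the new normal. The trade-off is that a legitimate process change stays flagged until someone re-baselines the detector.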

Predictive maintenance and failure forecasting

By analyzing historical sensor data, ML can estimate likely equipment failure windows. That gives you lead time to plan maintenance during scheduled downtime, reducing the risk of unsafe, unplanned interventions that attackers could exploit.
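
One simple way to turn trend data into a lead-time estimate is to fit a straight line to a degradation indicator and extrapolate when it crosses an alarm limit. The sketch assumes roughly linear wear and timestamps in hours, sorted oldest first. The vibration readings and the 7.1 limit used below are hypothetical. Real failure forecasting usually needs richer models, so treat this as a first-pass heuristic.

```python
def hours_until_limit(times_h, readings, limit):
    """Fit a least-squares line to (time, reading) pairs and estimate how many
    hours after the last sample the trend reaches `limit`.
    Returns None when the trend is flat or improving."""
    n = len(times_h)
    if n < 2 or n != len(readings):
        raise ValueError("need at least two (time, reading) pairs")
    mean_t = sum(times_h) / n
    mean_y = sum(readings) / n
    sxx = sum((t - mean_t) ** 2 for t in times_h)
    if sxx == 0:
        raise ValueError("timestamps must not all be identical")
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(times_h, readings))
    slope = sxy / sxx
    if slope <= 0:
        return None  # no upward trend toward the limit
    intercept = mean_y - slope * mean_t
    crossing_h = (limit - intercept) / slope
    return max(0.0, crossing_h - times_h[-1])


if __name__ == "__main__":
    # Hypothetical daily vibration readings (mm/s) on one pump bearing.
    print(hours_until_limit([0, 24, 48, 72], [2.0, 2.5, 3.0, 3.5], limit=7.1))
```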

Network and lateral movement detection

ML applied to flow metadata and device behavior helps spot lateral movement across OT subnets. Models tuned to OT traffic patterns can help distinguish legitimate engineering access from suspicious scanning or command injection.
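
A basic behavioral check for lateral movement compares each source's connections in a recent window against the (destination, port) pairs it used during a baseline period, then flags sources that suddenly fan out to many unfamiliar peers. This is a rule-style heuristic rather than a trained model. The `max_new_peers` limit is an assumed value, and the addresses and port 502 (Modbus/TCP) in the example are only illustrative.

```python
from collections import defaultdict


def new_fanout(baseline_flows, window_flows, max_new_peers=3):
    """Flows are (src, dst, port) tuples. Return {src: [(dst, port), ...]} for
    sources that reached more than `max_new_peers` pairs unseen in the baseline."""
    known = defaultdict(set)
    for src, dst, port in baseline_flows:
        known[src].add((dst, port))
    unseen = defaultdict(set)
    for src, dst, port in window_flows:
        if (dst, port) not in known[src]:
            unseen[src].add((dst, port))
    return {src: sorted(peers) for src, peers in unseen.items() if len(peers) > max_new_peers}


if __name__ == "__main__":
    baseline = [("10.0.1.5", "10.0.2.10", 502), ("10.0.1.20", "10.0.2.10", 502)]
    # 10.0.1.20 suddenly sweeps six controllers it never talked to before.
    window = [("10.0.1.5", "10.0.2.11", 502)] + [
        ("10.0.1.20", f"10.0.2.{h}", 502) for h in range(11, 17)
    ]
    print(new_fanout(baseline, window))
```

A single new peer (10.0.1.5 above) stays below the limit, which keeps routine engineering changes from alerting while still surfacing sweep-like behavior.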

Alert prioritization and analyst augmentation

AI can triage noisy alerts, correlate events across IT/OT, and propose likely root causes. This makes SOC and plant staff more effective - especially where OT expertise is scarce.
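
Alert prioritization can start as simple scoring before any learned ranking is added. The sketch below ranks alerts by detector severity, asset criticality, and model confidence. The `Alert` fields, the criticality register, and the multiplicative score are all hypothetical choices, not a standard formula.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    alert_id: str
    asset: str
    severity: int            # detector severity, 1 (low) to 5 (critical)
    model_confidence: float  # 0.0 to 1.0


# Hypothetical criticality register: 5 = safety-critical, 1 = low impact.
ASSET_CRITICALITY = {"SIS-01": 5, "PLC-07": 4, "HMI-03": 2}


def triage(alerts, criticality=ASSET_CRITICALITY, default_criticality=3):
    """Return alerts sorted from highest to lowest priority score."""
    def score(alert):
        crit = criticality.get(alert.asset, default_criticality)  # unknown assets get a middle rating
        return alert.severity * crit * alert.model_confidence
    return sorted(alerts, key=score, reverse=True)


if __name__ == "__main__":
    queue = [
        Alert("A1", "HMI-03", severity=5, model_confidence=0.9),
        Alert("A2", "SIS-01", severity=3, model_confidence=0.8),
        Alert("A3", "PLC-07", severity=2, model_confidence=0.5),
    ]
    print([a.alert_id for a in triage(queue)])
```

Here a medium-severity alert on a safety instrumented system outranks a high-severity alert on an HMI, which is the kind of judgment scarce OT staff would otherwise make by hand.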

Threat hunting and enrichment

ML helps cluster telemetry anomalies with threat intelligence to reveal campaigns or tools that target industrial environments. It helps you move from reactive to proactive defenses.

How attackers are using AI

AI lowers the barrier to crafting sophisticated attacks, for seasoned professionals and novices alike.

Automated reconnaissance: AI tools can scan public repositories, documentation, and internet-facing devices to assemble attack plans faster than a human attacker.

Weaponized code generation: Adversaries use code-generation models to write exploit scripts, phishing templates, and social-engineering lures. These artifacts often bypass simple pattern detection.

Polished social engineering: LLMs craft believable messages and impersonations that increase click-throughs on phishing campaigns.

Faster exploit chaining: AI helps map CVEs and device-specific weaknesses into automated chains that reach OT devices.

This means defenders must assume that attacks will be faster, more tailored, and often more persistent.

Practical limits and risks of ML in OT

Data quality and label scarcity

ML needs good data. OT telemetry is noisy, sometimes missing, and may vary by shift or season. Labeled attack data in OT is scarce - you cannot train supervised models effectively without staged incidents or synthetic augmentation. Expect false positives and plan for analyst review.

Model drift and explainability

Process changes, firmware updates, and seasonal loads cause model drift. Black-box models can be hard to explain to operators - which reduces trust. Choose models with interpretable outputs or combine ML with rule-based checks so operators can validate suggestions.
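
Drift is easier to manage once it is measured. One common technique is the population stability index (PSI), which compares a feature's distribution in recent data with the training baseline. The sketch below bins on the baseline range and clamps out-of-range values into the edge bins. A commonly cited rule of thumb reads PSI below 0.1 as stable and above 0.25 as a significant shift, but treat those cut-offs as a starting point to tune, not a standard.

```python
from math import log


def population_stability_index(baseline, recent, bins=10, eps=1e-6):
    """PSI = sum((q - p) * ln(q / p)) over bins, where p and q are the
    baseline and recent bin proportions."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        total = len(values)
        return [max(c / total, eps) for c in counts]  # eps avoids log(0) and division by zero

    p, q = proportions(baseline), proportions(recent)
    return sum((qi - pi) * log(qi / pi) for pi, qi in zip(p, q))


if __name__ == "__main__":
    # Hypothetical flow-rate feature before and after a setpoint change.
    before = [float(i % 100) for i in range(1000)]
    after = [v + 40 for v in before]
    print(population_stability_index(before, before), population_stability_index(before, after))
```

A PSI alarm on its own does not say whether the shift is a process change or an attack; it is a trigger to review the change log and, if appropriate, retrain.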

Safety-first constraints

You can’t interrupt a running process for the sake of a model update. Any ML deployment must respect safety interlocks and fail-safe behavior. That means ML systems often run in advisory mode first, then gradually move into automated controls after rigorous testing.

Adversarial machine learning

Attackers can try to poison or confuse ML systems by injecting crafted telemetry or manipulating timing so that malicious signals blend into normal noise. Harden training pipelines and validate the quality of incoming features.
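
Validating inputs before they reach training or inference is one concrete hardening step. The sketch checks each reading for missing values, physically impossible magnitudes, and implausible jumps. The range and step limits are hypothetical per-tag parameters that would come from process engineering, not from the model itself.

```python
from math import isnan


def validate_reading(prev, current, low, high, max_step):
    """Return reasons to quarantine `current`; an empty list means it passed.
    `low`/`high` are physical limits and `max_step` the largest credible change
    between consecutive samples for this tag (all hypothetical, per tag)."""
    if current is None or isnan(current):
        return ["missing value"]
    reasons = []
    if not low <= current <= high:
        reasons.append("outside physical range")
    if prev is not None and not isnan(prev) and abs(current - prev) > max_step:
        reasons.append("implausible rate of change")
    return reasons


if __name__ == "__main__":
    print(validate_reading(80.0, 81.0, low=0.0, high=150.0, max_step=5.0))
    print(validate_reading(80.0, 200.0, low=0.0, high=150.0, max_step=5.0))
```

Quarantined readings should go to review rather than silently into the training set; otherwise the filter itself becomes a place where poisoned data can hide.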

Implementation roadmap: from pilot to production (step-by-step)

This is a pragmatic sequence you can follow at a single plant or as a program across multiple sites.

1. Map and prioritize (2–4 weeks)

Inventory critical assets, sensors, and protocols.

Identify processes where an anomaly would cause the highest safety or production impact.

Prioritize one or two high-value pilot use cases (e.g., pump vibration anomaly, remote access anomalies).

2. Data baseline and hygiene (4–8 weeks)

Capture historical telemetry, network flow logs, and engineering change records.

Standardize timestamps, labels, and naming.

Fix obvious logging gaps before model training.
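
Standardizing timestamps and finding logging gaps can be partly automated. This sketch assumes ISO-8601 timestamps that carry a UTC offset, converts them to UTC, and reports intervals longer than 1.5 times the expected sampling period. The tolerance is an assumed value. Timestamps without an offset are rejected rather than guessed, since plant-local time and historian time often disagree.

```python
from datetime import datetime, timedelta, timezone


def to_utc(ts):
    dt = datetime.fromisoformat(ts)
    if dt.tzinfo is None:
        # Naive timestamps are ambiguous (plant local time? historian server time?).
        raise ValueError(f"timestamp has no UTC offset: {ts!r}")
    return dt.astimezone(timezone.utc)


def find_gaps(timestamps, expected_interval, tolerance=1.5):
    """Return (before, after) UTC pairs where consecutive samples are further
    apart than `tolerance` x `expected_interval`."""
    parsed = sorted(to_utc(ts) for ts in timestamps)
    limit = expected_interval * tolerance
    return [(a, b) for a, b in zip(parsed, parsed[1:]) if b - a > limit]


if __name__ == "__main__":
    samples = [
        "2025-10-07T10:00:00+02:00",  # same instant as 08:00 UTC
        "2025-10-07T08:01:00+00:00",
        "2025-10-07T08:05:00+00:00",  # three samples missing before this one
    ]
    print(find_gaps(samples, timedelta(minutes=1)))
```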

3. Choose the right models and operating mode (4–12 weeks)

Start with unsupervised/time-series models for anomaly detection. Use explainable methods or statistical baselines where possible.

Run models in parallel with existing detection (advisory mode) - do not let ML drive automated shutdowns during pilot.
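
During an advisory-mode pilot, a simple way to compare ML against existing detection is to line up both detectors' findings for the same period. The sketch assumes each finding has already been reduced to a shared event key; the `asset@time` strings are hypothetical.

```python
def compare_shadow_run(ml_findings, rule_findings):
    """Split findings from the same period into agreement and disagreement sets."""
    ml, rules = set(ml_findings), set(rule_findings)
    return {
        "both": sorted(ml & rules),
        "ml_only": sorted(ml - rules),     # route to analyst review
        "rules_only": sorted(rules - ml),  # possible ML blind spots
    }


if __name__ == "__main__":
    ml = ["pump-3@08:00", "ews-1@09:15"]
    rules = ["pump-3@08:00", "valve-2@10:30"]
    print(compare_shadow_run(ml, rules))
```

Nothing here acts on the process. The output only feeds the review process in step 4, which is what keeps the pilot advisory.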

4. Validation and human-in-the-loop (4–8 weeks)

Create an analyst review process for every ML finding.

Tune thresholds to reduce false positives while ensuring you catch meaningful deviations.
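
Threshold tuning can be grounded in the analyst review outcomes. The sketch assumes each ML finding has an anomaly score and an analyst verdict. It picks the highest threshold that still catches a target share (recall) of confirmed findings and reports how many false positives remain at that setting. The 0.9 default target is an assumption, not a recommendation.

```python
def pick_threshold(reviewed, min_recall=0.9):
    """reviewed: list of (anomaly_score, analyst_confirmed) pairs.
    Return (threshold, false_positives) for the highest score threshold
    whose recall on confirmed findings is at least `min_recall`."""
    if not 0 < min_recall <= 1:
        raise ValueError("min_recall must be in (0, 1]")
    positives = sum(1 for _, confirmed in reviewed if confirmed)
    if positives == 0:
        raise ValueError("no analyst-confirmed findings to tune against")
    for threshold in sorted({score for score, _ in reviewed}, reverse=True):
        caught = sum(1 for s, c in reviewed if c and s >= threshold)
        if caught / positives >= min_recall:
            false_positives = sum(1 for s, c in reviewed if not c and s >= threshold)
            return threshold, false_positives
    # Not reached: at the lowest score every confirmed finding is caught.
    raise AssertionError("unreachable")


if __name__ == "__main__":
    history = [(0.95, True), (0.9, False), (0.85, True), (0.7, True),
               (0.6, False), (0.4, False), (0.3, True)]
    print(pick_threshold(history, min_recall=0.75))
```

Raising the recall target lowers the threshold and lets more false positives through, so the chosen target is a trade-off to agree on with operators rather than a purely technical setting.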

5. Operationalize and integrate (ongoing)

Feed ML alerts into your SOC/plant operations workflows, playbooks, and ticketing systems.

Automate enrichment: asset context, maintenance windows, and recent change logs.
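
Enrichment often amounts to joining an alert with context the plant already has. The sketch below attaches asset context and marks whether the alert fell inside a planned maintenance window for the same asset. The dictionary layout is hypothetical.

```python
from datetime import datetime


def enrich(alert, asset_context, maintenance_windows):
    """Return a copy of `alert` with asset context and a maintenance flag."""
    asset = alert["asset"]
    in_window = any(
        w["asset"] == asset and w["start"] <= alert["time"] <= w["end"]
        for w in maintenance_windows
    )
    return {**alert, "context": asset_context.get(asset, {}), "during_maintenance": in_window}


if __name__ == "__main__":
    windows = [{"asset": "PUMP-3", "start": datetime(2025, 10, 7, 6), "end": datetime(2025, 10, 7, 10)}]
    alert = {"id": "A1", "asset": "PUMP-3", "time": datetime(2025, 10, 7, 8, 30)}
    print(enrich(alert, {"PUMP-3": {"area": "cooling water"}}, windows))
```

The function annotates rather than suppresses, because maintenance windows are also when unusual remote access is easiest to hide.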

6. Monitor, retrain, and govern

Retrain models on a scheduled cadence and after major process changes.

Maintain an ML governance plan: who can modify models, where training data lives, and rollback procedures.
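
A governance plan is easier to audit when model promotion and rollback go through one place that records what was approved. The sketch below is a minimal in-memory registry with hypothetical fields for the training-data location and approver. It refuses to promote a version that failed validation and keeps an append-only log, in the spirit of the model-change log described under compliance. A production setup would persist this record and tie it to existing change control.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class ModelVersion:
    version: str
    trained_on: date
    training_data: str       # hypothetical label for where the training snapshot lives
    validation_passed: bool
    approved_by: str


@dataclass
class ModelRegistry:
    versions: List[ModelVersion] = field(default_factory=list)  # append-only
    log: List[Tuple[str, str]] = field(default_factory=list)    # (action, version) audit trail
    active_index: int = -1

    @property
    def active(self) -> Optional[ModelVersion]:
        return self.versions[self.active_index] if self.active_index >= 0 else None

    def promote(self, candidate: ModelVersion) -> None:
        if not candidate.validation_passed:
            self.log.append(("rejected", candidate.version))
            raise ValueError(f"{candidate.version} failed validation; not promoted")
        self.versions.append(candidate)
        self.active_index = len(self.versions) - 1
        self.log.append(("promoted", candidate.version))

    def rollback(self) -> None:
        """Step back one entry in the version list; nothing is deleted."""
        if self.active_index < 1:
            raise RuntimeError("no earlier approved version to roll back to")
        self.active_index -= 1
        self.log.append(("rolled_back_to", self.active.version))


if __name__ == "__main__":
    reg = ModelRegistry()
    reg.promote(ModelVersion("v1", date(2025, 9, 1), "snapshot-a", True, "ot-lead"))
    reg.promote(ModelVersion("v2", date(2025, 10, 1), "snapshot-b", True, "ot-lead"))
    reg.rollback()
    print(reg.active.version, reg.log)
```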

This roadmap keeps production first while enabling ML to add value quickly.

Engineering considerations & best practices

Start small, validate fast. Deploy lightweight models on a subset of telemetry and measure the true positive rate before scaling.

Preserve operator workflows. Present ML outputs in language operators use - tie anomalies to machine IDs, setpoints, and SOPs.

Blend ML with domain rules. Rule-based checks remain crucial; ML should augment, not replace, engineering knowledge.

Secure the ML pipeline. Protect training data, model weights, and the inference pipeline from tampering.

Use synthetic data sensibly. When labeled incidents are rare, well-crafted simulation data helps, but always validate with real telemetry.

Define KPIs that matter. Track mean time to detect (MTTD), mean time to respond (MTTR), and reduction in unsafe process deviations.
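
MTTD and MTTR are simple arithmetic once incident timestamps are recorded consistently. The sketch assumes each incident has start, detection, and resolution times and measures MTTR from detection to resolution. Some teams measure it from incident start instead, so pick one definition and keep it stable across reporting periods.

```python
from datetime import datetime, timedelta


def mean_times(incidents):
    """Return (MTTD, MTTR) as timedeltas.
    MTTD = mean(detected - started); MTTR here = mean(resolved - detected)."""
    if not incidents:
        raise ValueError("no incidents recorded")
    n = len(incidents)
    mttd = sum((i["detected"] - i["started"] for i in incidents), timedelta()) / n
    mttr = sum((i["resolved"] - i["detected"] for i in incidents), timedelta()) / n
    return mttd, mttr


if __name__ == "__main__":
    day = datetime(2025, 10, 7)
    incidents = [
        {"started": day.replace(hour=8), "detected": day.replace(hour=10), "resolved": day.replace(hour=14)},
        {"started": day.replace(hour=9), "detected": day.replace(hour=9, minute=30),
         "resolved": day.replace(hour=11, minute=30)},
    ]
    print(mean_times(incidents))  # detection took 2 h and 30 min; response 4 h and 2 h
```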

Compliance, governance and explainability

Regulators and auditors are increasingly scrutinizing controls that affect critical-infrastructure defense. That means ML systems must be auditable and their decisions explainable. Keep the following practices:

Document training datasets and feature selection. Auditors will want to see provenance for model inputs.

Maintain a model-change log. Track versions, retrain dates, and validation results.

Use conservative automation policies. Only allow automated, safety-impacting actions after documented testing and approvals.

Ensure privacy and data residency. If telemetry contains personally identifiable information (PII), encrypt and manage access.
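
The conservative automation policy above can be enforced in code at the point where ML proposes an action. In the sketch below, anything proposed in advisory mode, and any safety-impacting action without a documented approval, is downgraded to a recommendation for the operator. The action names and the approvals mapping are hypothetical; the authoritative list of safety-impacting actions belongs in the plant's safety case.

```python
from enum import Enum


class Mode(Enum):
    ADVISORY = "advisory"
    AUTOMATED = "automated"


# Hypothetical list for illustration only.
SAFETY_IMPACTING = {"trip_pump", "close_valve", "shutdown_line"}


def decide(action, mode, approvals):
    """Return "execute" or "recommend" for an ML-proposed action.
    `approvals` maps an action name to a reference for its documented
    testing and sign-off (hypothetical format)."""
    if mode is Mode.ADVISORY:
        return "recommend"  # advisory mode never acts on its own
    if action in SAFETY_IMPACTING and action not in approvals:
        return "recommend"  # safety-impacting and not yet approved
    return "execute"


if __name__ == "__main__":
    print(decide("trip_pump", Mode.AUTOMATED, approvals={}))
    print(decide("open_ticket", Mode.AUTOMATED, approvals={}))
```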

These steps reduce operational risk and improve audit readiness.

Common pitfalls and how to avoid them

Pitfall: Deploying ML as a magical silver bullet.

Fix: Treat ML as one component of layered defense. Pair it with segmentation, access controls, and hardened devices.

Pitfall: Ignoring operator buy-in.

Fix: Involve engineers early; run joint tuning sessions and produce human-readable alerts.

Pitfall: No governance for model changes.

Fix: Implement change control, rollback options, and testing on mirrored data.

Pitfall: Overlooking adversarial risk.

Fix: Harden ingestion, use anomaly thresholds, and monitor model inputs for poisoning attempts.

How Shieldworkz helps - practical service layers

We translate these technical steps into operational outcomes by combining OT engineering with cybersecurity rigor:

Pilot design and data readiness: We help you choose pilot processes, collect and sanitize telemetry, and build a training pipeline that respects plant constraints.

Model selection and validation: We recommend and validate models suited to your process - focusing on explainability and safety-first deployment.

Human-in-the-loop playbooks: We build response workflows so ML outputs flow into operator dashboards and SOC playbooks you already use.

Threat modeling for AI attacks: We assess adversarial risks (poisoning, evasion), then harden model training and inference paths.

Managed ML operations: For plants without in-house ML staff, we provide managed model upkeep, retraining, and version control so you never operate on stale models.

We aim to deliver measurable improvements in detection speed, fewer false positives, and better alignment with regulatory requirements.

Recap: main takeaways

AI and Machine Learning are powerful tools for OT Security - when applied thoughtfully. They improve detection of subtle process anomalies, enable predictive maintenance, and help analysts triage alerts faster. But ML is not plug-and-play: you must address data quality, governance, explainability, and safety constraints. Meanwhile, attackers are also using AI to scale reconnaissance and craft convincing social engineering, so defenses must evolve accordingly. Recent industry analyses underscore growing OT targeting and the dual role of AI in both defense and offense.

If you want to move from theory to practice, Shieldworkz can help you run a short, low-impact pilot that proves value without disrupting production. Book a free OT Security consultation with Shieldworkz specialists.
